AI’s Ouroboros: the Risks of Iterative Degeneration


As artificial intelligence continues to evolve at a pace that could arguably be described as alarming, the potential for its use in international relations and diplomacy increases with every passing day. With added computing power, added interfaces, and additional modes of engagement, the scope of the technology continues to expand at a near-exponential pace.

As AI becomes more familiar and more pervasive in industries across the world, and with the greater access to the technology afforded to both individuals and organizations, it is only a matter of time before its presence becomes ubiquitous within all but the most isolated of organizations operating in the field of international relations. It is an element of this ubiquity that we will explore today.

In our previous article on the use of AI in international relations, Machina Ex Diplomacy, we explored the potentially detrimental effects of overuse of AI throughout the international relations space, in both the governmental and intergovernmental contexts. In that piece, we looked at the overreliance on AI by organizations and individuals who use it as a substitute for, rather than an augmentation of, the acquired skills of professionals in the field, and at how that process could lead to a deskilling of diplomats and professionals over time.

In this piece we will look at another angle, examining how AI operational behavior and bureaucratic trends could combine to create a pattern of deteriorating output quality that could have a significant impact on international engagements, diplomatic and otherwise.

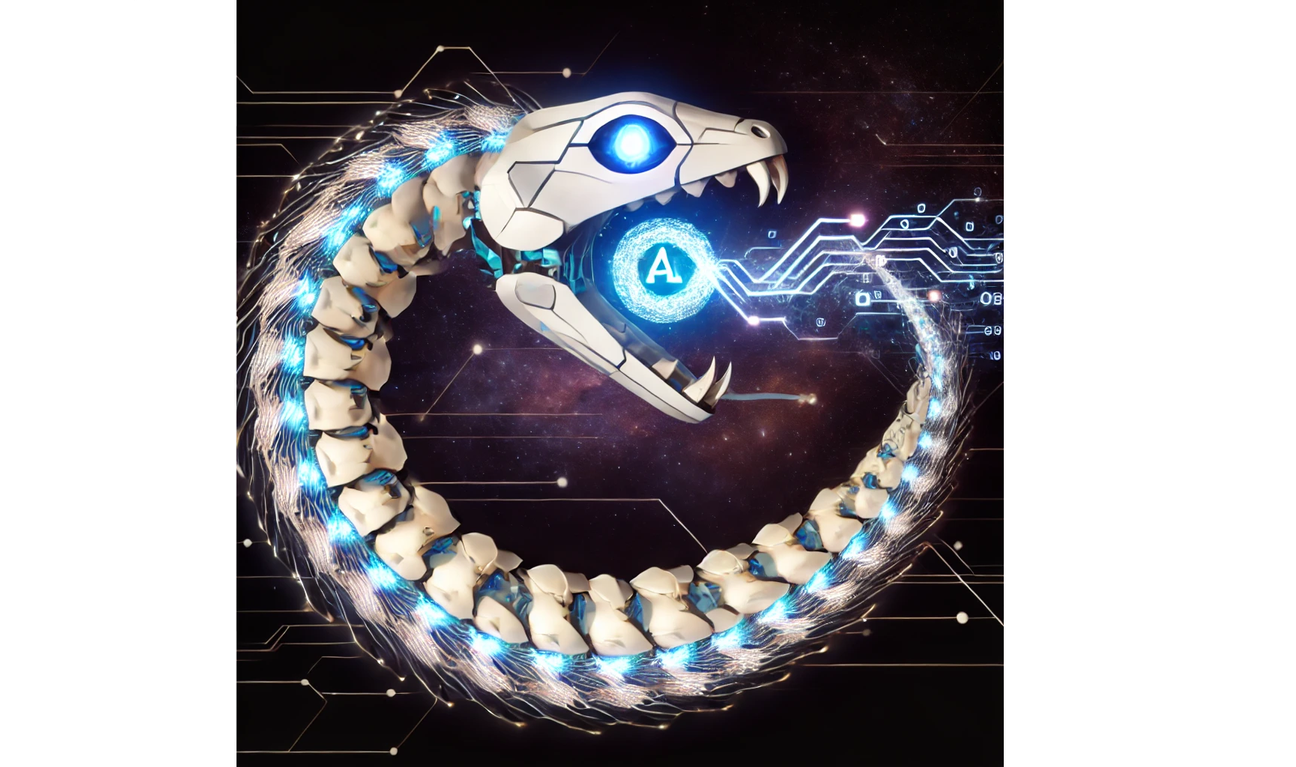

As the adoption of AI and its integration into the operational systems of international organizations increases, we can expect to see an expanding scope of use beyond current operations. With expanded use, the outputs of individuals within organizations and the outputs of organizations themselves can also be expected to feature a greater prevalence of AI-generated content. This partially synthetic (generated by AI) content will, in its own right, be used as input for AI, whether within an organization or externally, initiating a cycle of self-consumption of content by AIs around the world: an AI Ouroboros feeding upon itself.

This piece will form a starting point in exploring the ramifications of this process for the dynamics of international relations and diplomacy, including the possible deterioration in interactions as a result of this overuse, and why it is crucial at this early stage to develop guardrails to mitigate its negative impact.

Bureaucratic Pressures and the Allure of Efficiency

Organizations operating in international relations, whether governmental or intergovernmental, are often paradoxical in nature: they need to be dynamic, agile, and adaptable while at the same time navigating rigid bureaucratic structures imposed over generations of operation.

The output they generate, the analyses and the recommendations, needs to respond to complex and variable circumstances. They need to identify, address, and assess those circumstances, and formulate policies for engagement. They need to adapt to rapidly changing situations and evolve their positions accordingly. The professionals working to produce those outputs are often doing so while navigating intraorganizational dynamics and internal politics that consume their energy and focus.

Enter AI, providing a way to process and assimilate copious amounts of information in short order, and to produce recommendations and summaries from it. It can help translate documents, assist in their summarization, correct and amend reports, streamline documents, and even draft correspondence or other documents. Early-adopting organizations like the US Department of State are already experimenting with and exploring the ways that this technology can be leveraged to streamline operations.

Once the hurdle of reluctance to adopt is overcome, and experimentation and familiarity with the technology increase, the bureaucratic culture of organizations like the Department of State and its counterparts the world over will likely drive their staff to find creative ways to use AI to enhance their productivity and output.

AI's siren call of making tedious, repetitive, and complex processes simpler and more streamlined may be difficult to resist for even the most seasoned professionals in the field. The allure of automating repetitive tasks, sifting through mountains of data, and even performing sophisticated analyses may prove enticing enough to relegate as many tasks as possible to AI. Without restraint, however, this process may contain the seeds of its own destruction.

Intra-organizational Competition

Within both governmental and intergovernmental organizations operating in the international relations industry, competition is fierce. Competition for promotions, better postings, better positions, or even more prominent titles is a feature of the industry. To that end, and because the institutional systems are more often than not zero-sum games, there is considerable motivation to find ways to outperform and outproduce the person one office over.

With the advent of AI, and with the adoption of its use within organizations, the logical move by personnel will be to explore just how much use they can get out of the technology to give them an edge over their peers. If they can process data faster, come to conclusions more efficiently, and produce reports and recommendations within tighter deadlines, they can seize the advantage and reap the rewards.

As in all highly competitive settings, the astute move is to assume that competitors will be using every tool at their disposal to ensure better positioning. In that case, the competitive dynamics between operators within organizations can lead to extensive use of AI to push the limits of generating required outputs and accelerate the pace of production.

AI’s capacity to enhance speed, accuracy, and efficiency will make it indispensable. Early adopters within organizations will gain an advantage over their competitors, and others can be expected to scramble to keep up. This race toward efficiency in output leads in the direction of overuse of AI.

As AI's prevalence within organizations grows, output featuring synthetic content is also likely to increase. Reports, recommendations, and communications, once shaped exclusively by human minds, will increasingly incorporate the product of AI-generated processes.

As these outputs seep through the organizations’ internal documentation, and their institutional memories, they will begin forming layers of output that more prominently feature AI. This output, naturally, will feed into the cycle of internal communication and increasingly make up part of the input for other AI, which will then use it as input to generate its own output. This will likely form the first stage of the self-consuming cycle of the AI Ouroboros.

Increasing Prevalence of AI in Inter-organizational Communication

With increasing use of AI on intraorganizational levels, the next iteration is the communication between organizations. As they engage with others in the field, those with AI layers embedded within their organizational processes will feature an increased presence of AI-generated output. Think of Victoria Shi, the AI spokesperson of Ukraine’s Ministry of Foreign Affairs, as an example. While Victoria is simply a rendered model that reads prepared statements, it is a starting point for AI interaction with the external world.

With more extensive use, AI generated output may feature more frequently in outputs communicated to other organizations. As AI becomes more commonly used for things like recurring correspondence, and increasingly features in reports and documentation communicated extra-organizationally, it will gradually form new layers of output in the collective global institutional memory.

In the same vein as the competition between individuals, organizations, both governmental and intergovernmental, will be driven to use the technology more frequently. As in the case of individuals, competitiveness in the field of international relations is fierce. Being the first to identify an opportunity or imminent threat and respond to it provides considerable advantages. Institutions that adopt AI early can therefore expect to gain competitive advantages, prompting others to accelerate their own integration of the technology and accelerating the cycle of use with every iteration. This forms the next stage of the AI Ouroboros.

The Ouroboros: AI Consuming Itself

The AI Ouroboros could have a significantly greater impact than may be immediately apparent at this early stage, and it is one that needs to be both addressed and preempted.

A joint study by Rice University and Stanford University shows that, through a process its authors termed “Model Autophagy Disorder”, AI that consumes synthetic data suffers progressive degeneration in the precision, quality, and diversity of its output. The authors examined three kinds of training loop: a fully synthetic loop (each generation trained entirely on AI-generated data), a synthetic augmentation loop (AI-generated data combined with a fixed set of real data), and a fresh data loop (AI-generated data combined with new real data at every generation). The decline was most rapid in the fully synthetic loop, slower in the synthetic augmentation loop, and could be held off in the fresh data loop only when enough new real data was supplied at each cycle.
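The degenerative loop can be made concrete with a toy simulation. The sketch below is not the study's experimental setup; it is a deliberately simplified illustration in which the "model" is just a bell curve fitted to a small sample, and each new generation is trained on data drawn from the previous generation's fit. The function name `run_loop` and its parameters (`sample_size`, `fresh_fraction`) are assumptions made for this example only.

```python
import random
import statistics


def run_loop(generations, sample_size, fresh_fraction, seed=0):
    """Refit a Gaussian each generation on a mix of synthetic and fresh data.

    Returns the fitted standard deviation (a crude proxy for output
    diversity) at every generation. All names here are illustrative.
    """
    rng = random.Random(seed)

    def real_data(n):
        # "Fresh" data: samples from the true distribution N(0, 1).
        return [rng.gauss(0.0, 1.0) for _ in range(n)]

    data = real_data(sample_size)
    spreads = []
    for _ in range(generations):
        # "Train" the model: estimate mean and spread from the current data.
        mu = statistics.fmean(data)
        sigma = statistics.stdev(data)
        spreads.append(sigma)
        # Build the next training set from model output plus fresh data.
        n_fresh = round(fresh_fraction * sample_size)
        synthetic = [rng.gauss(mu, sigma) for _ in range(sample_size - n_fresh)]
        data = synthetic + real_data(n_fresh)
    return spreads


fully_synthetic = run_loop(generations=500, sample_size=10, fresh_fraction=0.0)
with_fresh = run_loop(generations=500, sample_size=10, fresh_fraction=0.5)

print("fully synthetic, first vs last spread:", fully_synthetic[0], fully_synthetic[-1])
print("half fresh data, first vs last spread:", with_fresh[0], with_fresh[-1])
```

In this toy setting the fully synthetic loop loses its spread over successive generations, while the loop that receives fresh samples each cycle stays anchored to the real distribution. Real language or image models are far more complex, so the sketch should be read as an intuition aid rather than as evidence.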

This study hits on a very important and relevant issue when it comes to the topic at hand: the progressive inclusion of synthetic inputs leads to deteriorating quality of output over iterative cycles. This is a matter that, given the nature of an industry that relies on processing large amounts of information under tight deadlines, should become a concern in the near future.

The results of the study highlight a need on the part of organizations to take this process of deterioration of output into account. It showed that not only does the quality of the output degenerate in general terms, but that there are risks of rapidly evolving echo chambers and bias amplification over a cycle of iterations. The implication here is that, given that the cycles are expected to accelerate, the echo chambers and biases could be expected to magnify rapidly within organizations, diverting organizational (and, by consequence, governmental) positions toward suboptimal conclusions.

Complicating this issue further, if enough organizations fall into this pattern, the compound effects of the biases and echo chambers could result in a tipping point of deteriorating engagement on the international level, both between governments and between intergovernmental organizations.

This potential degradation of output could become especially risky in interactions on the international stage. If enough organizations adopt these tools and integrate them without understanding the scope of the risks associated with the degenerative nature of AI output that feeds on even partially synthetic data, communications could lose nuance, diversity, and depth and result in an even more precarious global situation.

The Contamination of Diplomatic Discourse

The risk posed by a potential AI Ouroboros or autophagy dominating intergovernmental communications should not be underestimated. Consider, for example, an organization like the EU, which has existing operational biases toward Russia. Output produced throughout the organization’s operations would, if generated partially through AI, lean into the existing biases and amplify them.

The resultant recommendations and the subsequent actions confirm the biases, which are then fed into the system again, creating a reverberating echo chamber of bias and self-confirmation that leads in one direction to the exclusion of other, more creative solutions.

If we inject into that example Russia’s use of AI as well, with its own set of operational biases toward Europe, the interactions between the two sides, with amplified biases and echo chambers, will escalate far more rapidly toward adversarialism and leave little to no leeway for the course corrections that would have been available without the overreliance on AI.

While the example adopts reductio ad absurdum for simplicity’s sake (both the EU and Russia have well-versed personnel on hand to identify trends and patterns), the core concept remains applicable over time. It would not happen overnight, but rather through a gradual magnification of biases and amplification of the respective echo chambers, reaching a point where reliance on AI could effectively hamstring organizational agility and creativity.

Stripping diplomacy of its human element through progressive overreliance on AI could lead the international order to an abyss of global machine interactions geared intractably toward chaos.

Preempting the Ouroboros

The aforementioned study shows that both fully synthetic and partially synthetic loops exhibit issues of degenerating quality, albeit at different paces. It would be unrealistic to suggest that governmental and intergovernmental organizations ensure that AI is fed only fresh data (the prevalence of AI-generated data is already rising across industries worldwide, and diplomacy is no exception). It is nevertheless important that organizations operating in this sphere adopt mechanisms of self-correction to mitigate the effects of the deterioration of output.

While developers are already working on technical solutions to address the risks of AI's iterative degeneration, the bureaucratic response may lag behind. The study itself states that introducing enough fresh data into the inputs of AI at every cycle can mitigate the deterioration in the quality of the output.

This means that organizations can mitigate the potential downturns by consciously adopting enforceable and trackable guidelines within their operational structures that ensure that the data used to feed AI incorporates enough fresh data at every cycle to maintain the quality of the output.

For this to materialize, however, organizations and institutions need to recognize the risks posed by the deterioration of output. It then requires proactive engagement on their part to identify the percentage of fresh data required to mitigate the degradation of output, while in parallel requiring enough self-awareness to identify organizational biases present within their structures. One of the main challenges with this approach is that it requires an investment of time and effort to identify the risks, in addition to developing the necessary oversight and implementation mechanisms.
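As a rough illustration of what a trackable guideline might look like in practice, the sketch below checks whether a batch of inputs contains a minimum share of fresh (non-AI-generated) material before it is passed to an AI system. Everything in it is hypothetical: the `Document` record, the `synthetic` provenance tag, and the thresholds used are assumptions for the example, and the appropriate threshold is precisely what each organization would need to determine for itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """A hypothetical input record with a provenance tag."""
    title: str
    synthetic: bool  # True if any part of the document was AI-generated


def fresh_share(batch):
    """Return the fraction of documents in the batch that are not synthetic."""
    if not batch:
        raise ValueError("batch is empty")
    fresh = sum(1 for doc in batch if not doc.synthetic)
    return fresh / len(batch)


def meets_guideline(batch, minimum_fresh_share):
    """Check the batch against an organization-defined minimum fresh share."""
    return fresh_share(batch) >= minimum_fresh_share


# Illustrative batch; titles and tags are made up for the example.
batch = [
    Document("Field cable", synthetic=False),
    Document("AI-drafted summary", synthetic=True),
    Document("Interview notes", synthetic=False),
    Document("AI-translated memo", synthetic=True),
]

print("fresh share:", fresh_share(batch))
print("meets a 60% guideline:", meets_guideline(batch, 0.6))
```

A check of this kind only works if provenance is recorded reliably at the point where content is created or received, which is an organizational task rather than a technical one.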

On the external level, optimizing the process of using AI requires identifying synthetically produced data received from other organizations that may not have gone through the same rigorous processes. Identifying synthetic content and separating it from other forms of communication can help both train the organizations' own AI, and ensure that the resultant analyses and recommendations are not in their own turn skewed.

As AI’s technical aspects evolve, bureaucracies operating in the realm of international affairs cannot afford to remain complacent and rely solely on the technical corrections that will be offered by developers. On both the governmental and intergovernmental levels, organizations and institutions should seek to develop practices and mechanisms geared toward mitigating the risks associated with both internal and external deterioration of output as a result of overuse of AI.

The already precariously positioned international arena cannot afford the deskilling of diplomats and professionals, and should avoid relegating analysis and decisions to AI in the absence of the nuance, intuition, and ethical considerations provided by professionals in the field.

AI holds vast potential for transforming global diplomacy, but if left unchecked, it risks becoming an Ouroboros, feeding on itself and progressively undermining the very structures it was meant to enhance. To preempt that, active measures should ideally be adopted at these early stages; delaying the adoption of these mechanisms may result in trying to catch up to a rapidly evolving technology that has already shown itself capable of outpacing anything that came before it.